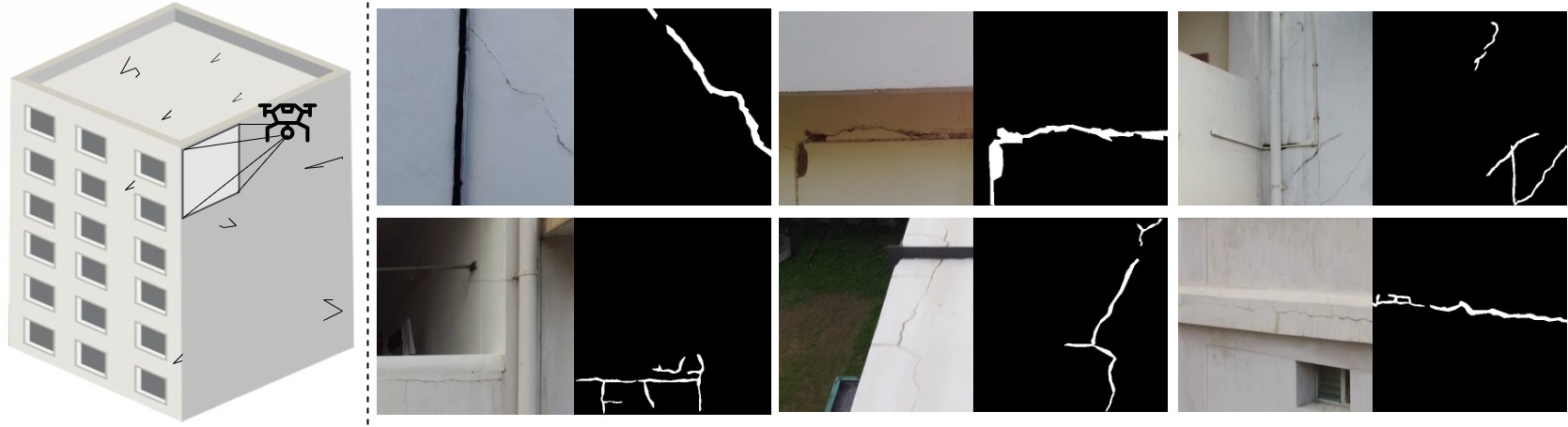

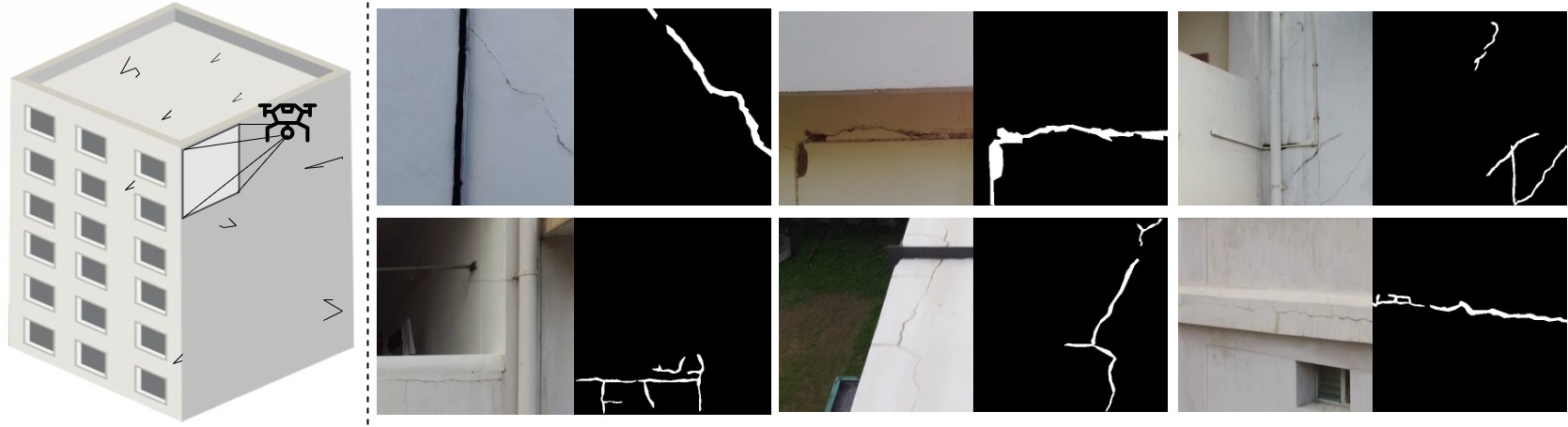

| In order to do crack segmentation based on pictures of a buildings taken by a drone:

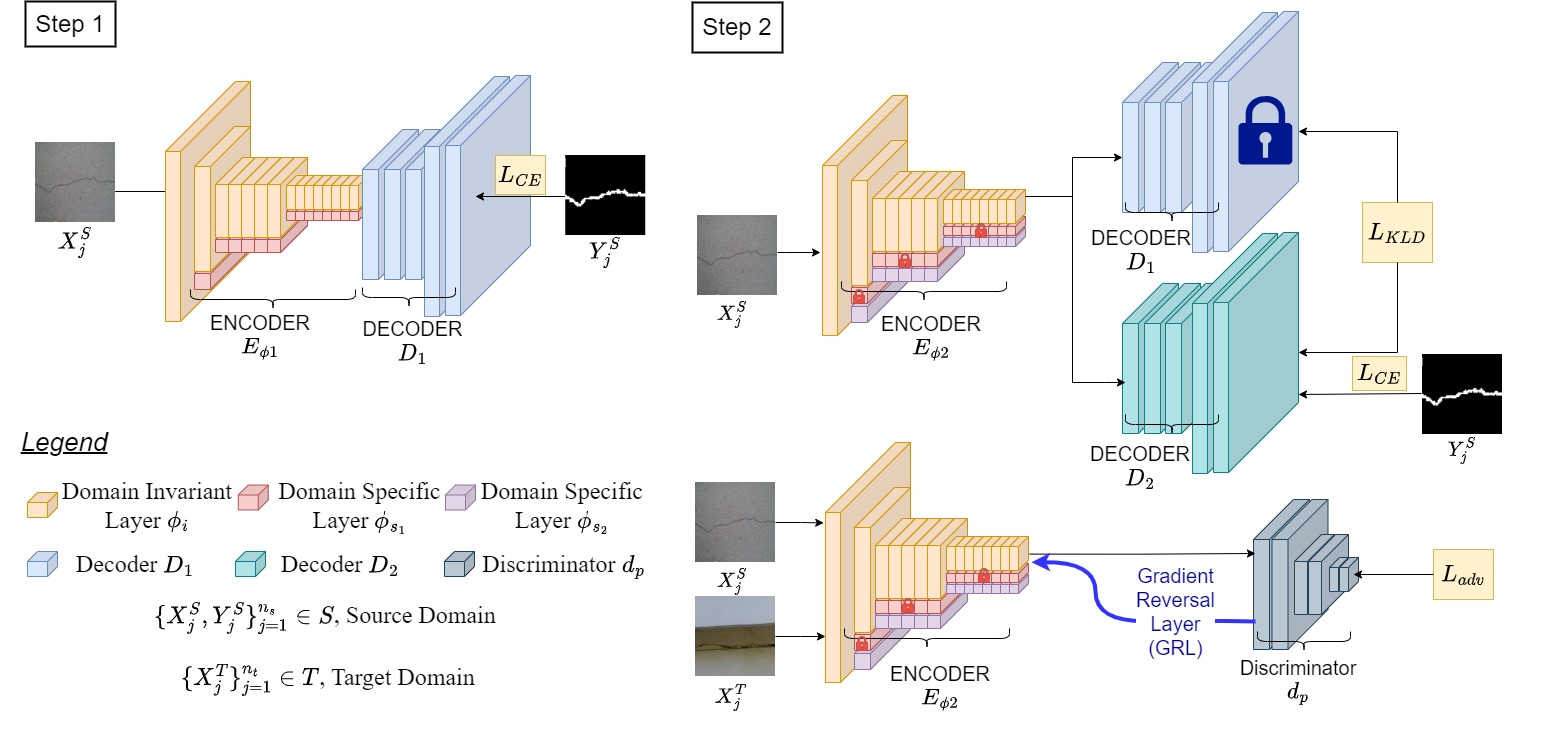

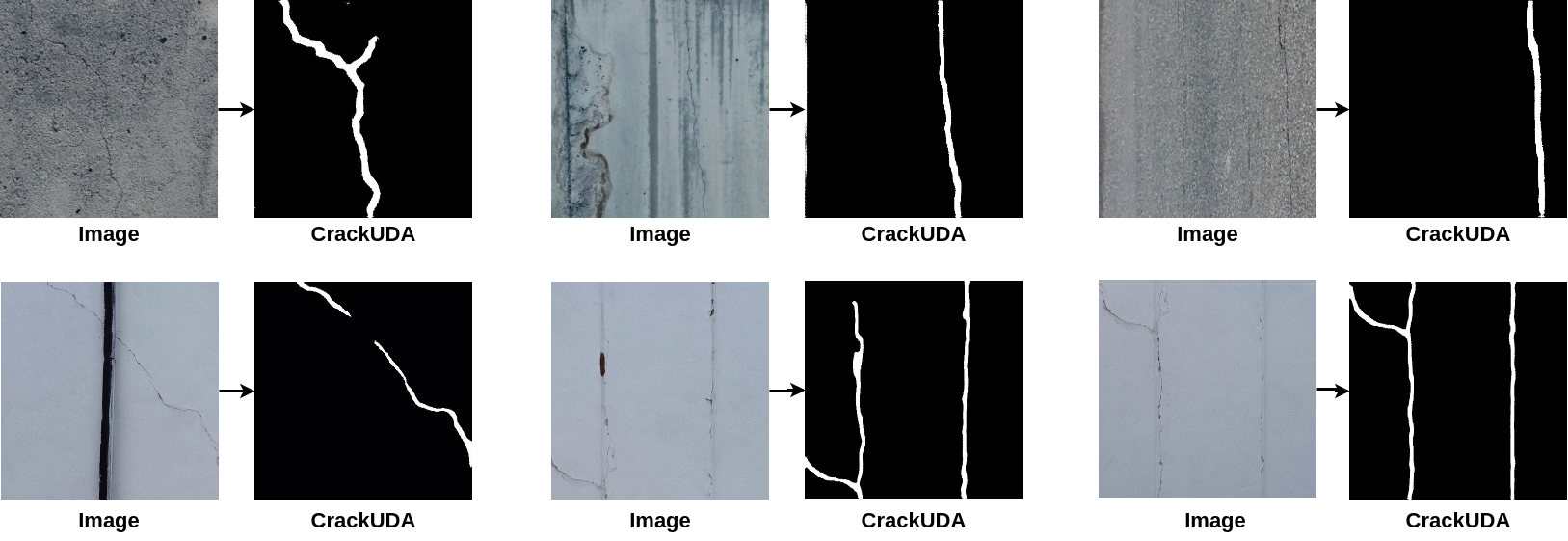

- We propose CrackUDA, a novel incremental UDA approach that ensures robust adaptation and effective

crack segmentation.

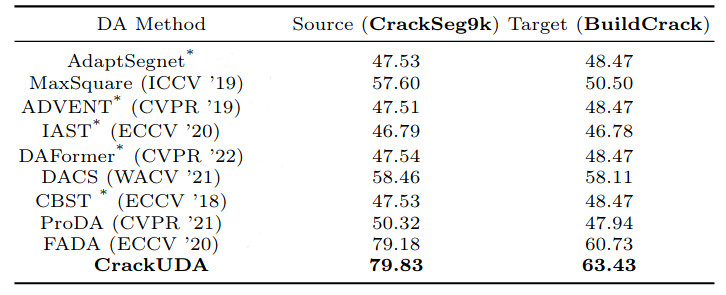

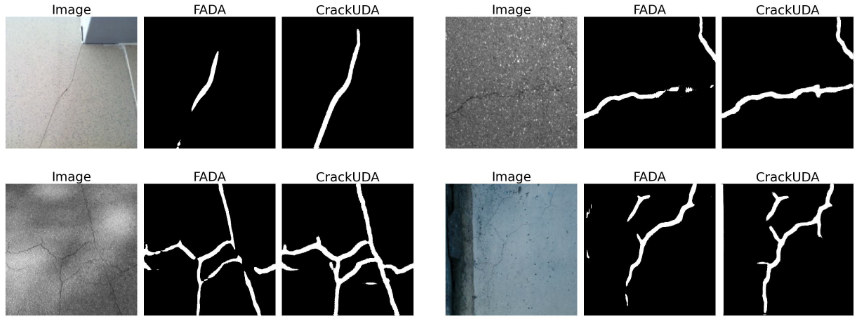

- We demonstrate the effectiveness of CrackUDA by

achieving higher accuracy in the challenging task of

building crack segmentation, surpassing the state-of-

the-art UDA methods. Specifically, CrackUDA yields

an improvement of 0.65 and 2.7 mIoU on the source

and target domains, respectively.

- We introduce BuildCrack, a new building crack dataset collected via a drone.

|
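The gains above are reported in mIoU (mean Intersection-over-Union), the standard segmentation metric. As a rough illustration of what the metric measures, the minimal sketch below computes mIoU over flattened per-pixel label maps. It assumes a binary labeling (0 = background, 1 = crack) and hypothetical toy data; the function name `mean_iou` is illustrative only and is not taken from CrackUDA. Evaluation protocols vary (for example, whether intersections and unions are accumulated over a whole dataset or averaged per image), so this should not be read as the exact evaluation code behind the reported numbers.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int = 2) -> float:
    """Mean Intersection-over-Union over one set of pixels.

    pred and target are flattened per-pixel class indices. The labeling
    0 = background, 1 = crack is an assumption made for illustration.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    ious = []
    for c in range(num_classes):
        # Pixels labeled c in both maps vs. in at least one of them.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Hypothetical 8-pixel example: ground truth vs. prediction.
    target = [0, 0, 1, 1, 0, 0, 1, 0]
    pred = [0, 0, 1, 0, 0, 1, 1, 0]
    # Background IoU = 4/6, crack IoU = 2/4, so mIoU = 7/12 (about 0.583).
    print(round(mean_iou(pred, target), 3))
```

mIoU is commonly reported on a 0 to 100 scale in papers, i.e. the value returned here multiplied by 100.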